The Top 6 Legal Considerations for Licensing AI Software

How to License AI: a Lawyer’s Perspective

In the last year, I have seen all sorts of licensing agreements with respect to AI. From a complete ban on a vendor using GenAI all the way to a very sophisticated description of the vendor’s model training practices, including a distinction in ownership of base models and custom models. It got me thinking that the world of licensing AI is really the wild, wild west that could benefit from someone sitting down and working it all out.

So here goes,

Why AI license terms are important:

Let’s start with the Samsung situation that has petrified every corporate executive since it happened. In early 2023, a Samsung employee input proprietary source code belonging to Samsung into ChatGPT. At the time,ChatGPT’s terms clearly stated that they used input data for training their models. However, who reads terms of service?

Oops.

Why does training a model with sensitive data cause a problem? Because as the term “training” implies, you are teaching the model with that data. It will always and forever remember that data as part of its training and that data can absolutely become the output. ChatGPT is a public model that is available to anyone and everyone with a subscription, that means the model’s shared output may contain your proprietary input data. There has been speculation that the Samsung code may have been output to thousands of developers since early 2023 but there is no real proof. If that ChatGPT model had been private to Samsung, this leak would not have occurred.

In this article, I will explore the difference between licenses you are granting, and licenses you are receiving as a user of AI models.

Processing vs training data:

Inputs or “prompts” includes the data you pass through the model for it to do its thing, whether that is predictions, inference or similarity, etc. We used to call this processing data (still do actually). But input data is not necessarily training data. Right now, we associate prompting with chatting to a chat bot. The AI model is taking your data to perform a function, which means the AI provider needs a license to process your input data and you need a license to use the model to perform that function. For input data to become training data, there would need to be a different license from you to the AI provider allowing the AI provider to have a perpetual use of that input.

Technically, any data used for training is distinguishable from input data. I like to analogize training data to being transformed into something similar to code. In order for the AI model to read it, it cannot be in plain text format. For this reason, any AI provider wanting to use your data for training needs a license from you that includes the right to use, manipulate, transform or make derivatives of the input or output data.

Public model licensing:

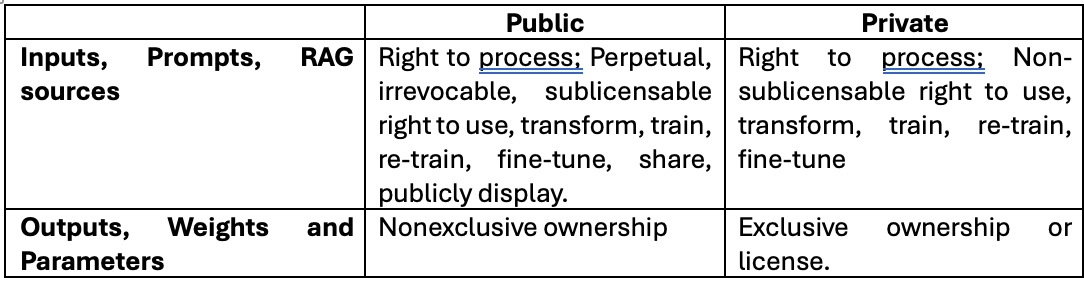

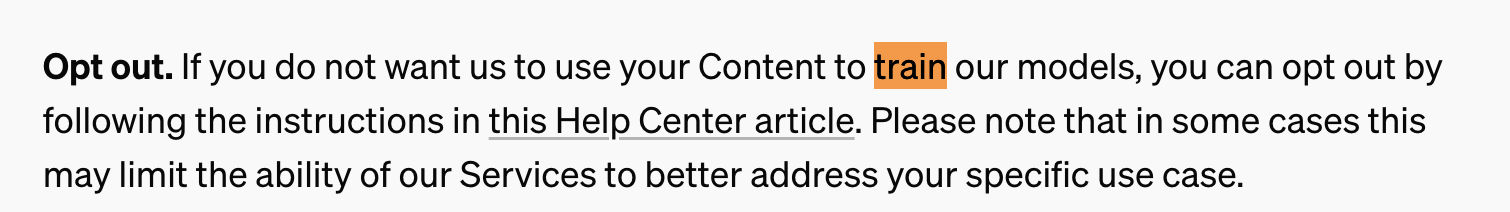

Just because you give an AI provider a license to use your input data for training, it does not mean that you are allowing that AI provider to share it as output with everyone. It depends on whether the AI system is public or private. A public model means that input and output data may be shared with consumers anywhere and everywhere. Within the realm of public models, there are two types of licensing inputs and outputs: opt in and opt out. To date, the free version of ChatGPT contains rights to use your inputs and outputs for training unless you optout. Claude, an Anthropic LLM, uses an opt in model seeking your consent to use your data for training through a feedback “button” (the thumbs up, thumbs down). Thus, there is an opt in and an opt out approach to getting your consent to use your data for training a public AI model.

Note: Content is defined by OpenAI as your input and output.

Regardless of the opt in or optout approach, I mentioned above that the license to training data needs to include the right to transform it, but, for public models, it would also need a“perpetual, irrevocable, sublicensable license to use your data for public display.” (quick drafting note: it could be a “right to sublicense” if the right is derived from the original license, rather than the licensor) Perpetual and irrevocable because once a model is trained, it cannot forget that training data, as mentioned in the Samsung example above. Sublicensable so the AI public model provider can share your input and output data with everyone else using the public model.

The copyright issues of owning outputs:

Here is another important distinction.You own your inputs because you are human and generated them, right? Let’s go with that and say, so far, copyright law has established that you own the inputs so you are the only person who can license them, whether for training or processing. Okay, what about outputs? Outputs, on the other hand, are machine generated and in the United States the copyright protection is opaque for machine generated content. This is interesting. Given the state of the US law, when OpenAI, or Google or Anthropic grant you ownership of the outputs, can they actually do that? If no one can own it, how can they grant you an ownership right?

But they do it anyway. Why? Well probably because it looks good. Or because they know once this concept is fully litigated, that granting you a right to use the outputs of their models will make for a smooth landing. But, it’s a right that you share with everyone else using the same public model, even if someone else gets the same output.

The copyright ownership issue varies by country and because of this, I am not going to explore it any further in this article other than to say, the default for AI licensing in public models is to grant non-exclusive ownership to the user of any output.

To make this even more confusing, this is also why the AI model provider needs a license to your output for training.

Diving deeper into the “training data” license

Training, re-training and fine-tuning:

In my conversations with people, what they are most concerned about is an AI provider using their data for training. But not everyone completely understands the different types of training that can be used on their data and the state of licensing currently does not do any better. Training an original model, re-training a model with either more data or better data to create the next iteration of that model, and fine-tuning a model are different processes and, in my opinion, worth making a distinction in a contract.

Interestingly, most licenses I have reviewed do not seek permission for re-training a model. However, you can almost guarantee that re-training is happening where the license includes the words “train.” I believe the assumption is that training and re-training are the same thing. However, my opinion is that they are not the same because a re-trained model may be for an entirely new purpose that you did not intend or consent to and the licenses need to be clear on this point.

And the same goes for fine-tuning. What is fine-tuning? I am glad you asked. I probably will not do it the proper justice, so I will just go ahead and link to a great article that I found explaining model weights and the adjustments machine learning engineers make to improve model behavior and predictions or inferences. But the point is that fine-tuning is using data to improve an existing model, perhaps make it more expert at a certain task. Another way I think about it is, re-training is building a whole new iteration of a model, so think GPT-3 to GPT-4, whereas fine-tuning is tweaking an existing model using custom data so it performs better at a specific task. The intention of both is to improve performance but a re-trained model continues to drive forward with the same purposes and a fine-tuned model veered to the left.

I have rarely seen a license agreement for AI address fine-tuning. In fact, the only time it is mentioned in OpenAI’s API terms is with regards to indemnity, where OpenAI excludes its indemnity obligations to the user if the user uses its own data to fine-tune an OpenAI model. They will not indemnify you for that fine-tuned model. That makes sense because OpenAI has no control over the data you are using to fine-tune, but, wow. So if you are liable for an OpenAI model that you fine-tuned, does that mean you own it?

I think it is implied in the OpenAI API terms but that’s not good enough for me. It should be explicit and I have yet to see any contract explicitly address the license or ownership to a fine-tuned model. If you fine-tuned a model, then it should become a private model to you and subject to your exclusive license or ownership.

RAG:

I have to touch on one last type of license, specific to LLMs, and that is retrieval augmented generation or “RAG. ”RAG is telling the general LLM to use specific sources when generating its response. In my humble opinion, this is just processing. RAG data is not “remembered” by the LLM i.e. it is not a method of training, re-training or fine-tuning an AI model. Instead, it is telling the LLM that, when it is crafting its output, to use this specific source(s) in generating a response. An augmented prompt, for lack of a better description. (I created a custom GPT using the EU AI Act and this article for you to try it out) Any AI provider requires a license to the sources in order for you to use this technique, but it should be revocable, you should be able to delete your source data at any time and these sources should not be used for training, re-training or fine-tuning. That is why sources (we could also call them RAG inputs) cannot be lumped into a general definition of your data unless the license to your data excludes training, re-training and fine-tuning. Except, of course, if that AI provider also wants to use your RAG sources for training, re-training or fine-tuning.

Private model licensing:

At the beginning of this article, I mentioned private or custom models. A private AI model means that you want the model trained, re-trained or fine-tuned on your data and kept solely for your use. You might infer from that statement that you should own the model, but it is dependent on the situation. If you use the services of a provider to train or re-train a custom or private model, you definitely want ownership of the weights or parameters, but you may not necessarily need or want ownership of the model. If they are running the model for you and have the expertise to build it in the first place, then you might want them to host it and manage it. In that case, maybe you don’t care so much about ownership vs an exclusive license (you don’t need it but you don’t want anyone else to have it either). If you fine-tuned a model, then presumably it should be owned by you and, again, that means you should own the weights and parameters, even if it is conceivable that another company has the exact same data and fine-tunes a model with the same resulting weights and parameters. Owning the weights or parameters is your way of saying that this specific model is exclusive to me.

Conclusion

As our interactions with AI continues to evolve, I am confident the licensing will become less oblique, but for now, read the terms of service and make sure that you are giving the license that you intended to give, and that you are getting the license you need.

And, please, don’t be afraid that what happened to Samsung will happen to you.